Wish I had any idea what this was about

What exactly is your point? Also, this is not a meme.

Edit: never mind, I checked your modlog history. You’re just racist.

Show me as well

You’ve been banned multiple times before because of your hate boner for China. Please go outside.

Oh, also Holocaust trivialization. God damn, you suck.

Lol, he’s reporting you for being a rule breaker. What an absolute piss baby.

I am outside and there’s no hate. I support the people everywhere.

What is the purpose of attacking with fabrications which don’t match the modlog (context for the people’s court) or the screenshot?

Show it and I’m happy to correct any harmful behavior!

Buddy, you have literally been banned for Sinophobia and Holocaust trivialization in at least three instances. I am not here to argue with you, and judging by your glib responses, I doubt you are doing any self-reflection.

I have nothing to argue about. I’ll earnestly fix any mistakes. Honesty can go both ways if you’re willing.

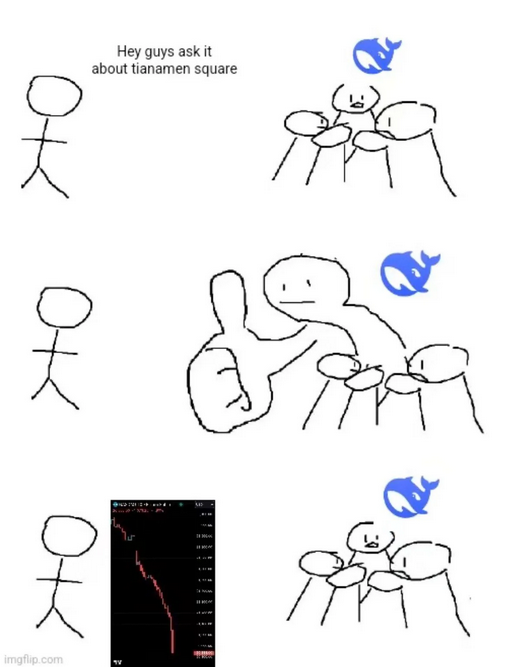

Context? Did someone leak DeepSeek’s system prompt?

It was shared to an IM group from somewhere and reshared here. From what I understood, it seems to be pretty open when asked how it arrives at its answers.

As implied above, the raw format fed to and output from DeepSeek R1 is:

`<|begin▁of▁sentence|>{system_prompt}<|User|>{prompt}<|Assistant|><think>The model rambles on to itself here, “thinking” before answering</think>The actual answer goes here.`
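To make the layout above concrete, here is a minimal sketch that assembles a raw prompt string and splits a completion into the monologue and the answer. The special-token strings are copied from the comment above and are an assumption: the official tokenizer may use slightly different characters, and the helper names `build_prompt` and `split_output` are hypothetical.

```python
# Special-token strings copied from the comment above (assumption: the real
# tokenizer may spell them with slightly different characters).
BOS = "<|begin▁of▁sentence|>"
USER = "<|User|>"
ASSISTANT = "<|Assistant|>"
THINK_OPEN, THINK_CLOSE = "<think>", "</think>"


def build_prompt(system_prompt: str, prompt: str) -> str:
    """Assemble the raw input string; the model continues after <|Assistant|>."""
    return f"{BOS}{system_prompt}{USER}{prompt}{ASSISTANT}"


def split_output(completion: str) -> tuple[str, str]:
    """Split a completion into (monologue, answer).

    Tolerates an optional leading <think> tag, since it is unclear whether
    that tag is part of the prompt or generated by the model.
    """
    text = completion.removeprefix(THINK_OPEN)
    thinking, sep, answer = text.partition(THINK_CLOSE)
    if not sep:  # the model never closed its monologue
        return thinking.strip(), ""
    return thinking.strip(), answer.strip()


# Usage with a made-up completion:
thought, answer = split_output("<think>2+2 is basic arithmetic.</think>4")
print(repr(thought), repr(answer))
```

Nothing here is special to the model: the "thinking" is just text that happens to sit before a closing tag.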

It’s not a secret architecture; there’s no window into its internal state here. This is just a regular model trained to give an internal monologue before the “real” answer.

The point I’m making is that the monologue is totally dependent on the system prompt, the user prompt, and, honestly, a “randomness” factor. It’s not actually a good window into the LLM’s internal “thinking”; you’d want to look at specific tests and logit spreads for that.
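The “randomness” factor comes from sampling: at each step the model outputs logits over possible next tokens, and one token is drawn from the resulting distribution. A minimal sketch with temperature-scaled softmax follows; the candidate tokens and logit values are made up for illustration and not taken from any real model.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw logits to probabilities; higher temperature flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Draw one next token at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidates for the first word of a monologue, with made-up logits.
tokens = ["First", "Okay", "Hmm", "Let"]
logits = [2.0, 1.5, 1.0, 0.5]

# Different seeds can start the monologue with different words.
for seed in range(3):
    print(seed, sample_token(tokens, logits, temperature=1.0, rng=random.Random(seed)))
```

The probabilities from `softmax` (the “spread”) say more about what the model favours than any single sampled monologue does.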

“Reasoning” models like DeepSeek R1 or ChatGPT-o1 (I hate these naming conventions) work a little differently. Before responding, they do a preliminary inference round to generate a “chain of thought”, then feed it back into themselves along with the prompt and other context. Tuning this reasoning round improves the output by giving the model “more time to think.”

In R1 (not sure about GPT), you can read this chain of thought as it’s generated, which feels like it’s giving you a peek inside its thoughts, but I’m skeptical of that feeling. It isn’t really showing you anything secret, just running itself twice (very simplified). Perhaps some of its “cold start data” (as DS puts it) does include instructions like that, but it could also be something it dreamed up from similar discussions in its training data.
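A toy sketch of the simplified “running itself twice” idea: round one produces a chain of thought, round two sees that text alongside the prompt. `generate` is a hypothetical stand-in for a model call, and the prompt wording is invented. Per the earlier comment, R1 actually writes the monologue and answer in one continuous generation; either way, the answer is just conditioned on the reasoning text sitting in its context.

```python
from typing import Callable

# Hypothetical stand-in for an LLM call: text in, text out.
Generate = Callable[[str], str]


def answer_with_reasoning(generate: Generate, prompt: str) -> tuple[str, str]:
    """Round 1 asks for reasoning; round 2 feeds it back with the prompt."""
    reasoning = generate(f"{prompt}\n\nThink step by step:")
    answer = generate(f"{prompt}\n\nReasoning:\n{reasoning}\n\nFinal answer:")
    return reasoning, answer


# Usage with a fake model that records what it was shown.
calls = []


def fake_model(text: str) -> str:
    calls.append(text)
    return "step 1, step 2" if text.endswith("step:") else "42"


reasoning, answer = answer_with_reasoning(fake_model, "What is 6*7?")
print(reasoning, "->", answer)
```

The second call only “knows” the reasoning because it was pasted into its input, which is why the visible chain of thought is not a hidden internal state.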

So it could be a hallucination, or just the skew of its training data then?

I’m no expert at all, but I think it might be hallucination/coincidence, skew in the training data, or even more arbitrary causes: either the devs enforced that behaviour somewhere in the prompts, or the user asked for something like “give me the answer as if you were a Chinese official protecting national interests” and this ended up in the chain of thought.

I’m not an expert, so take anything I say with hearty skepticism as well. But yes, I think it’s possible that’s just part of its data. Presumably it was trained using a lot of available Chinese documents, and possibly official Party documents include such statements often enough for it to internalize them as part of responses on related topics.

It could also have been intentionally trained that way. It could be using a combination of methods. All these chatbots are censored in some ways, otherwise they could tell you how to make illegal things or plan illegal acts. I’ve also seen so many joke/fake DeepSeek outputs in the last 2 days that I’m taking any screenshots with extra salt.

Zero context to this…

My experience with DeepSeek R1 is that it’s quite “unbound” by itself, but the chat UI (and maybe the API? Not 100% sure about that) does seem to be more aligned.

All the open Chinese LLMs have been like this, rambling on about Tiananmen Square as much as they can, especially if you ask in English. The devs seem to like “having their cake and eating it,” complying with the govt through the most publicly visible portals while letting the model rip underneath.